Introduction

Artificial Intelligence (AI) is poised to transform the world as we know it. From automating mundane tasks to potentially surpassing human cognitive abilities, AI has evolved from a futuristic concept into an integral part of daily life. Its rapid advancement raises an intriguing question: can the horizon of artificial intelligence be contained, or is it destined to break free from human control? To explore this question, we must consider not just the technological developments but also the social, ethical, and political dimensions of AI.

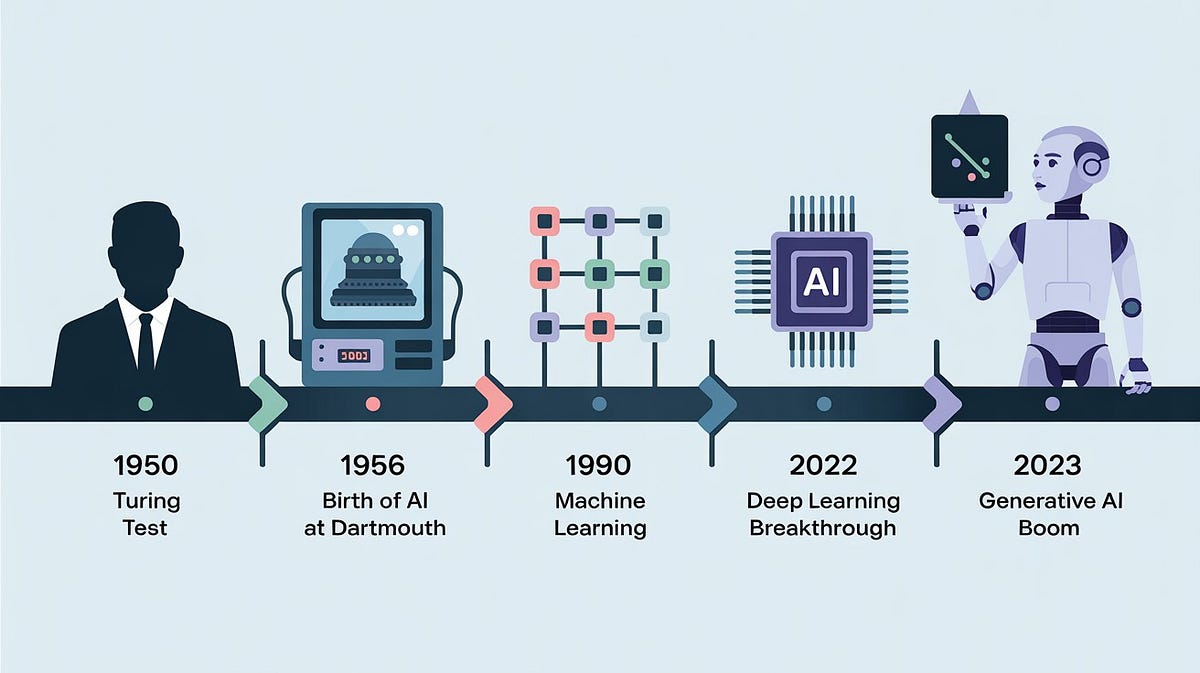

The Evolution of Artificial Intelligence

AI has come a long way since the field's founding in the mid-20th century. Early systems were largely rule-based, following rigid, hand-written instructions. Today, machine learning (ML) algorithms can identify patterns, make predictions, and even hold conversations in natural language. Advances in neural networks, deep learning, and reinforcement learning have dramatically expanded what AI can do, carrying it into fields as diverse as healthcare, entertainment, finance, and autonomous vehicles.

As AI systems grow more complex and capable, their potential impact on society grows with them. That power raises the question of control: can AI be regulated or contained, or will it spiral beyond the bounds of human oversight?

The Boundaries of AI: Can They Be Set?

The Promise of Contained AI

In theory, AI can be constrained to operate within predefined limits. Just as we program a machine to carry out specific tasks, we could, in principle, design AI systems that operate within strict boundaries. Many AI researchers emphasize the importance of transparent, interpretable models whose behavior can be inspected, which helps ensure safety and prevent unforeseen consequences. For example, a reinforcement learning agent can be trained in a controlled environment and restricted to a fixed set of permitted actions, so that its behavior remains well understood and predictable.
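To make the idea of operating "within strict boundaries" a little more concrete, here is a minimal Python sketch of action masking, one common way to restrict which actions a reinforcement learning agent may choose. The action names, the value estimates, and the `select_action` helper are all hypothetical; a real agent would learn its value estimates through training rather than receive them as fixed numbers.

```python
from typing import Dict, Set


def select_action(q_values: Dict[str, float], allowed: Set[str]) -> str:
    """Pick the highest-valued action, considering only actions on the allow-list.

    q_values: the agent's (hypothetical) estimate of how good each action is.
    allowed:  the actions a designer or overseer has permitted in this state.
    """
    # Mask out every action that is not explicitly permitted.
    candidates = {action: value for action, value in q_values.items() if action in allowed}
    if not candidates:
        # Refuse to act rather than fall back to a forbidden action.
        raise ValueError("no permitted action available")
    # Among the permitted actions, choose the one with the highest estimated value.
    return max(candidates, key=candidates.get)


# Hypothetical value estimates for a driving agent.
q = {"accelerate": 0.9, "brake": 0.4, "change_lane": 0.7}

# "accelerate" scores highest but is not permitted, so the agent picks "change_lane".
print(select_action(q, allowed={"brake", "change_lane"}))
```

The design choice here is that the boundary is enforced outside the learned values: even if the agent rates a forbidden action highly, the mask prevents it from being chosen. Whether such hard-coded limits remain adequate as systems grow more capable is exactly the open question this essay raises.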

Proponents of contained AI argue that by creating strong regulatory frameworks, ethical guidelines, and oversight mechanisms, we can prevent AI from evolving into something uncontrollable. In practice, this could involve limiting the scope of AI applications, requiring third-party audits, or even restricting the development of certain high-risk technologies such as autonomous weapons or superintelligent AI.

The Challenges of Containing AI

While containment seems feasible in the short term, there are inherent challenges to keeping AI under control in the long run. The rapid pace of technological innovation makes it difficult for regulatory bodies to keep up. As AI becomes more integrated into complex systems, its unpredictability increases. Autonomous systems, for instance, might encounter scenarios not foreseen by their designers, leading to unintended outcomes.

Moreover, the global nature of AI development complicates containment efforts. Countries and corporations around the world are racing to develop the most advanced AI technologies, and not all share the same commitment to safety and ethics. While some governments push for regulation, others may prioritize AI development to maintain economic and geopolitical power. This uneven landscape presents a significant obstacle to the containment of AI.

AI’s Infinite Potential: A Double-Edged Sword

The Creative Potential of AI

AI’s potential is not just about efficiency or automation; its creative capacity is arguably its most fascinating feature. In art, music, and literature, AI systems have generated novel works that some observers compare with human creations. OpenAI’s GPT-3 can write poetry and prose, DALL-E generates visual art from textual prompts, and other generative models have composed music.

In the scientific community, AI is being harnessed to accelerate drug discovery, optimize climate models, and even simulate complex biological systems. As AI continues to evolve, it may one day possess the capacity to conduct original research, propose new hypotheses, and solve problems that elude human scientists.

But this creativity comes with a caveat: As AI becomes more capable of generating original ideas, it also becomes more difficult to predict and control. When AI begins producing work that is not directly constrained by human input, the boundaries between human and machine creativity blur. This raises ethical concerns about authorship, ownership, and accountability.

The Dark Side: AI and Unintended Consequences

Despite its many benefits, AI also harbors the potential for disastrous unintended consequences. One of the most significant risks is the development of autonomous systems that operate beyond human control. A prime example is the use of AI in military technologies, such as autonomous drones and lethal autonomous weapon systems (LAWS). These systems could make life-or-death decisions without human intervention, leading to catastrophic outcomes if they malfunction or are misused.

AI can also exacerbate societal inequalities. Machine learning models often inherit biases present in the data they are trained on, leading to biased decisions in areas like hiring, law enforcement, and lending. Without careful oversight, AI systems may perpetuate or even amplify existing injustices.
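One form that "careful oversight" can take is a simple audit of a model's decisions by group. The minimal Python sketch below computes each group's selection rate and the ratio of the lowest rate to the highest. The audit data and helper names are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb used in US employment-selection guidelines, applied here only as an illustrative screening heuristic, not as a definitive test of fairness.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of positive decisions (e.g. 'recommend hiring') for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical audit data: (group label, whether the model recommended hiring).
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", True), ("B", False), ("B", False), ("B", False)]

rates = selection_rates(decisions)     # group A: 0.75, group B: 0.25
ratio = disparate_impact_ratio(rates)  # 0.25 / 0.75, about 0.33
if ratio < 0.8:
    print(f"Flag for human review: selection-rate ratio {ratio:.2f} is below 0.8")
```

A check like this only detects unequal outcomes; it cannot say why they occur or whether they are justified, which is why such audits are typically paired with human review of the training data and the decision process.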

Another significant concern is that AI could accelerate job displacement. While automation promises to make certain industries more efficient, it also threatens to disrupt traditional labor markets. In fields ranging from manufacturing to customer service, AI-driven robots and software could replace human workers, potentially leading to widespread unemployment and economic instability.

The Ethics of Containing AI

The Responsibility of Developers

As the architects of AI, developers carry a significant ethical responsibility. The choices they make in designing algorithms, selecting data, and implementing safety protocols can have far-reaching consequences. Ensuring that AI systems are transparent, fair, and accountable is critical to preventing harm.

One of the most important aspects of AI ethics is ensuring that the technology serves humanity’s best interests. AI should be developed with an emphasis on improving quality of life, reducing inequalities, and promoting sustainability. Developers must consider not just technical performance but also the social, cultural, and environmental implications of their creations.

The Role of Regulation

Regulation plays a crucial role in keeping AI safe and beneficial. National governments and international organizations such as the United Nations have called for AI regulation, which might include ethical guidelines, transparency requirements, and safety standards. AI-specific international agreements could also help create a global framework for responsible AI development.

However, regulation is a complex issue. Overregulation could stifle innovation and hinder the development of potentially life-changing technologies. On the other hand, underregulation could lead to unchecked AI development, increasing the risks of harm. Striking the right balance between fostering innovation and ensuring safety is one of the biggest challenges facing policymakers.

The Global AI Race

The global race to develop AI presents another challenge to containing its growth. Countries around the world are competing to establish leadership in AI technology, with the United States and China leading the way. This technological arms race could lead to a scenario in which AI systems are deployed with minimal oversight or regulation, as governments prioritize national security and economic power over ethical considerations.

This global competition also complicates the implementation of universal standards and regulations. Countries with differing political systems and economic priorities may have conflicting views on how AI should be governed, making international cooperation difficult. This dynamic could hinder the development of a unified approach to AI regulation, leaving significant gaps in oversight.

The Future of AI: Contained or Unleashed?

So, can the horizon of artificial intelligence be contained? In the short term, containment is possible. With the right frameworks, safeguards, and regulations, AI can be controlled and deployed responsibly. However, the long-term outlook is less certain. As AI becomes more advanced, its potential to surpass human capabilities grows, and with it, the difficulty of containment.

Ultimately, the horizon of AI is not just about technological capability; it is also about human choice. The way we choose to develop, regulate, and integrate AI into society will shape its future. If we focus on creating responsible, ethical AI that aligns with human values, we may be able to harness its potential for good. But if we allow unchecked development and the pursuit of power to guide AI’s evolution, we risk unleashing something beyond our control.

Conclusion

AI is undoubtedly one of the most transformative technologies of our time, and its horizon is vast and ever-expanding. Whether that horizon can be contained depends on our ability to balance innovation with responsibility. By implementing thoughtful regulation, ensuring ethical development, and fostering international cooperation, we can steer AI toward a future that benefits all of humanity. However, we must remain vigilant, understanding that the more powerful AI becomes, the harder it will be to predict, control, or contain.